Once combined via linking, further thinking and writing, they can be released as novel ideas for everyone to use.

I separate collecting from processing because I don’t hack everything that pops up in my mind while I read a text into my computer. Instead, I take notes on paper.

Creativity is just connecting things. When you ask creative people how they did something, they feel a little guilty because they didn’t really do it, they just saw something. It seemed obvious to them after a while.

—Steve Jobs (via lifehacker and Zettel no. 201308301352) ❧

In other words, it’s just statistical thermodynamics. Eventually small pieces will float by each other and stick together in new and hopefully interesting ways. The more particles you’ve got and the more you can potentially connect or link things, the better off you’ll be.

Annotated on March 23, 2020 at 04:36PM

Getting ready for the possibility of major disruptions is not only smart; it’s also our civic duty

Last year, the California Attorney General held a tense press conference at a tiny elementary school in the one working class, black neighborhood of the mostly wealthy and white Marin County. His office had concluded that the local district "knowingly and intentionally" maintained a segregated school, violating the 14th amendment. He ordered them to fix it, but for local officials and families, the path forward remains unclear, as is the question: what does "equal protection" mean?

- Eric Foner is author of The Second Founding

Hosted by Kai Wright. Reported by Marianne McCune.

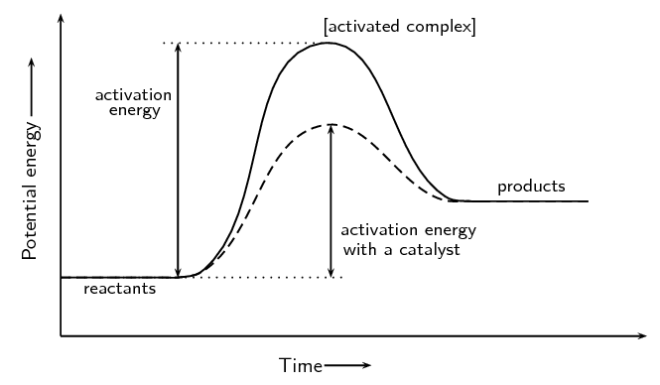

I’m reminded of endothermic chemical reactions that take a reasonably high activation energy (an input cost), but one that is worth it because it ultimately raises all the participants to a better and higher level. When are we going to realize that doing a little bit of hard work today will help us all out in the longer run? I’m hopeful that shows like this can act as a catalyst to lower the amount of energy it takes to get us all to a better place.

This Marin County example is interesting because it is so small and involves two schools. The real trouble comes in larger communities like Pasadena, where I live, which have much larger populations and where the public schools are suffering while the dozens and dozens of private schools do far better. Most people probably don’t realize it, but we’re still suffering from the heavy effects of racism and busing from the early 1970s.

All this makes me wonder if we could apply some math (topology and statistical mechanics perhaps) to these situations to calculate a measure of equity and equality for individual areas to find a maximum of some sort that would satisfy John Rawls’ veil of ignorance in better designing and planning our communities. Perhaps the difficulty may be in doing so for more broad and dense areas that have been financially gerrymandered for generations by redlining and other problems.

I can only think about how we’re killing ourselves as individuals and as a nation. The problem resembles individual choices about smoking and their effect on our long-term health care outcomes, or individual consumption and its broader effects on global warming. We’re ignoring the global maximums we could be achieving (where everyone everywhere has improved lives) in the search for personal local maximums. Most of these things are not zero-sum games, but sadly we feel like they must be and actively work against both our own and our collective best interests.

Maxwell’s Demon is a famous thought experiment in which a mischievous imp uses knowledge of the velocities of gas molecules in a box to decrease the entropy of the gas, which could then be used to do useful work such as pushing a piston. This is a classic example of converting information (what the gas molecules are doing) into work. But of course that kind of phenomenon is much more widespread — it happens any time a company or organization hires someone in order to take advantage of their know-how. César Hidalgo has become an expert in this relationship between information and work, both at the level of physics and how it bubbles up into economies and societies. Looking at the world through the lens of information brings new insights into how we learn things, how economies are structured, and how novel uses of data will transform how we live.

César Hidalgo received his Ph.D. in physics from the University of Notre Dame. He currently holds an ANITI Chair at the University of Toulouse, an Honorary Professorship at the University of Manchester, and a Visiting Professorship at Harvard’s School of Engineering and Applied Sciences. From 2010 to 2019, he led MIT’s Collective Learning group. He is the author of Why Information Grows and co-author of The Atlas of Economic Complexity. He is a co-founder of Datawheel, a data visualization company whose products include the Observatory of Economic Complexity.

My interest was also piqued by the mention of Lynne Kelly’s work, which I’m now knee-deep in. I suspect it could dramatically expand on what we think of as the capacity of a personbyte, though the limit of knowledge there still exists. The idea of mnemotechniques within indigenous cultures certainly expands on the way knowledge worked in prehistory and what we classically think of and frame as collective knowledge or collective learning.

I also think there are some interesting connections with Dr. Kelly’s mentions of social equity in prehistorical cultures and the work that Hidalgo mentions in the middle of the episode.

There are a small handful of references I’ll want to delve into after hearing this, though it may take time to pull them up unless they’re linked in the show notes.

hat-tip: Complexity Digest for the reminder that this is in my podcatcher. 🔖 November 22, 2019 at 03:28PM

When you’re young, you’re generally in school(s) where you’re around people exactly your age, generally close to your socio-economic status, and with many of the same feelings, thoughts, and aspirations. You’re literally surrounded by hundreds (or sometimes thousands) who are so very similar to you. Once you’re out of college, it’s far harder to find this type of environment, and this is what makes it seem so much harder to find good friends. In adulthood almost everyone you’re surrounded by is dramatically different from you, and that makes it harder to find things you have in common. In the end it’s really the statistical mechanics that are working against you.

To work against this one needs to be more flexible and broad in what one is looking for in companionship, but generally the older one gets the less flexible one becomes.

Statistical physics is the natural framework to model complex networks. In the last twenty years, it has brought novel physical insights on a variety of emergent phenomena, such as self-organisation, scale invariance, mixed distributions and ensemble non-equivalence, which cannot be deduced from the behaviour of the individual constituents. At the same time, thanks to its deep connection with information theory, statistical physics and the principle of maximum entropy have led to the definition of null models reproducing some features of empirical networks, but otherwise as random as possible. We review here the statistical physics approach for complex networks and the null models for the various physical problems, focusing in particular on the analytic frameworks reproducing the local features of the network. We show how these models have been used to detect statistically significant and predictive structural patterns in real-world networks, as well as to reconstruct the network structure in case of incomplete information. We further survey the statistical physics frameworks that reproduce more complex, semi-local network features using Markov chain Monte Carlo sampling, and the models of generalised network structures such as multiplex networks, interacting networks and simplicial complexes.

Comments: To appear on Nature Reviews Physics. The revised accepted version will be posted 6 months after publication

Say you could make a thousand digital replicas of yourself – should you? What happens when you want to get rid of them?

Complex networks describe a wide range of systems in nature and society. Frequently cited examples include the cell, a network of chemicals linked by chemical reactions, and the Internet, a network of routers and computers connected by physical links. While traditionally these systems have been modeled as random graphs, it is increasingly recognized that the topology and evolution of real networks are governed by robust organizing principles. This article reviews the recent advances in the field of complex networks, focusing on the statistical mechanics of network topology and dynamics. After reviewing the empirical data that motivated the recent interest in networks, the authors discuss the main models and analytical tools, covering random graphs, small-world and scale-free networks, the emerging theory of evolving networks, and the interplay between topology and the network's robustness against failures and attacks.

During decades the study of networks has been divided between the efforts of social scientists and natural scientists, two groups of scholars who often do not see eye to eye. In this review I present an effort to mutually translate the work conducted by scholars from both of these academic fronts hoping to continue to unify what has become a diverging body of literature. I argue that social and natural scientists fail to see eye to eye because they have diverging academic goals. Social scientists focus on explaining how context specific social and economic mechanisms drive the structure of networks and on how networks shape social and economic outcomes. By contrast, natural scientists focus primarily on modeling network characteristics that are independent of context, since their focus is to identify universal characteristics of systems instead of context specific mechanisms. In the following pages I discuss the differences between both of these literatures by summarizing the parallel theories advanced to explain link formation and the applications used by scholars in each field to justify their approach to network science. I conclude by providing an outlook on how these literatures can be further unified.

Social scientists focus on explaining how context specific social and economic mechanisms drive the structure of networks and on how networks shape social and economic outcomes. By contrast, natural scientists focus primarily on modeling network characteristics that are independent of context, since their focus is to identify universal characteristics of systems instead of context specific mechanisms. ❧

August 25, 2018 at 10:18PM

Science and Complexity (Weaver 1948); explained the three eras that according to him defined the history of science. These were the era of simplicity, disorganized complexity, and organized complexity. In the eyes of Weaver what separated these three eras was the development of mathematical tools allowing scholars to describe systems of increasing complexity. ❧

August 25, 2018 at 10:19PM

Problems of disorganized complexity are problems that can be described using averages and distributions, and that do not depend on the identity of the elements involved in a system, or their precise patterns of interactions. A classic example of a problem of disorganized complexity is the statistical mechanics of Ludwig Boltzmann, James-Clerk Maxwell, and Willard Gibbs, which focuses on the properties of gases. ❧

August 25, 2018 at 10:20PM

Soon after Weaver’s paper, biologists like Francois Jacob (Jacob and Monod 1961), (Jacob et al. 1963) and Stuart Kaufmann (Kauffman 1969), developed the idea of regulatory networks. Mathematicians like Paul Erdos and Alfred Renyi, advanced graph theory (Erdős and Rényi 1960) while Benoit Mandelbrot worked on Fractals (Mandelbrot and Van Ness 1968), (Mandelbrot 1982). Economists like Thomas Schelling (Schelling 1960) and Wasily Leontief (Leontief 1936), (Leontief 1936), respectively explored self-organization and input-output networks. Sociologists, like Harrison White (Lorrain and White 1971) and Mark Granovetter (Granovetter 1985), explored social networks, while psychologists like Stanley Milgram (Travers and Milgram 1969) explored the now famous small world problem. ❧

Some excellent references

August 25, 2018 at 10:24PM

First, I will focus in these larger groups because reviews that transcend the boundary between the social and natural sciences are rare, but I believe them to be valuable. One such review is Borgatti et al. (2009), which compares the network science of natural and social sciences arriving at a similar conclusion to the one I arrived. ❧

August 25, 2018 at 10:27PM

Links are the essence of networks. So I will start this review by comparing the mechanisms used by natural and social scientists to explain link formation. ❧

August 25, 2018 at 10:32PM

When connecting the people that acted in the same movie, natural scientists do not differentiate between people in leading or supporting roles. ❧

But they should because it’s not often the case that these are relevant unless they are represented by the same agent or agency.

August 25, 2018 at 10:51PM

For instance, in the study of mobile phone networks, the frequency and length of interactions has often been used as measures of link weight (Onnela et al. 2007), (Hidalgo and Rodriguez-Sickert 2008), (Miritello et al. 2011). ❧

And they probably shouldn’t because typically different levels of people are making these decisions. Studio brass and producers typically have more to say about the lead roles and don’t care as much about the smaller ones which are overseen by casting directors or sometimes the producers. The only person who has oversight of all of them is the director, and even then they may quit caring at some point.

August 25, 2018 at 10:52PM

Social scientists explain link formation through two families of mechanisms; one that finds it roots in sociology and the other one in economics. The sociological approach assumes that link formation is connected to the characteristics of individuals and their context. Chief examples of the sociological approach include what I will call the big three sociological link-formation hypotheses. These are: shared social foci, triadic closure, and homophily. ❧

August 25, 2018 at 10:55PM

The social foci hypothesis predicts that links are more likely to form among individuals who, for example, are classmates, co-workers, or go to the same gym (they share a social foci). The triadic closure hypothesis predicts that links are more likely to form among individuals that share “friends” or acquaintances. Finally, the homophily hypothesis predicts that links are more likely to form among individuals who share social characteristics, such as tastes, cultural background, or physical appearance (Lazarsfeld and Merton 1954), (McPherson et al. 2001). ❧

Definitions of social foci, triadic closure, and homophily within network science.

August 26, 2018 at 11:39AM
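
The triadic closure hypothesis quoted above can be made concrete with a small Python sketch. It is only an illustration under my own assumptions: the `common_neighbors` function and the toy `friends` network are hypothetical and do not come from the paper. For every pair of people who are not yet linked, it counts the friends they share; under triadic closure, pairs with higher counts would be the more likely future links.

```python
from itertools import combinations


def common_neighbors(adjacency):
    """Count shared neighbors for every pair of nodes that is not yet linked.

    `adjacency` maps each node to the set of nodes it is linked to
    (an undirected network, so links appear in both directions).
    """
    scores = {}
    for a, b in combinations(sorted(adjacency), 2):
        if b in adjacency[a]:
            continue  # already linked, nothing to close
        shared = adjacency[a] & adjacency[b]
        if shared:
            scores[(a, b)] = len(shared)
    return scores


# Hypothetical toy friendship network, invented for illustration only.
friends = {
    "ana": {"bo", "cy"},
    "bo": {"ana", "cy", "di"},
    "cy": {"ana", "bo", "di"},
    "di": {"bo", "cy"},
    "eve": set(),
}

print(common_neighbors(friends))
```

In this toy network the only unlinked pair with shared friends is ana and di, who both know bo and cy. A homophily version would compare attributes of the two people rather than their neighbors.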

Yet, strategic games look for equilibrium in the formation and dissolution of ties in the context of the game theory advanced first by (Von Neumann et al. 2007), and later by (Nash 1950). ❧

August 25, 2018 at 10:58PM

Preferential attachment is the idea that connectivity begets connectivity. ❧

August 25, 2018 at 10:59PM

Preferential attachment is an idea advanced originally by the statisticians John Willis and Udny Yule in (Willis and Yule 1922), but has been rediscovered numerous times during the twentieth century. ❧

August 25, 2018 at 11:00PM

Rediscoveries of this idea in the twentieth century include the work of (Simon 1955) (who did cite Yule), (Merton 1968), (Price 1976) (who studied citation networks), and (Barabási and Albert 1999), who published the modern reference for this model, which is now widely known as the Barabasi-Albert model. ❧

August 25, 2018 at 11:01PM
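
As a rough illustration of "connectivity begets connectivity", here is a minimal Python sketch of a network grown by preferential attachment. It is a simplified toy in the spirit of the Barabási-Albert model, not the authors' code: the function names, the starting core of `m + 1` fully connected nodes, and the parameter `m` (links added per new node) are my own assumptions.

```python
import random
from collections import Counter


def grow_network(n_nodes, m=2, seed=0):
    """Grow a network in which each new node links to m existing nodes,
    chosen with probability proportional to their current degree."""
    rng = random.Random(seed)
    # Assumed starting point: a small complete core of m + 1 nodes.
    edges = [(i, j) for i in range(m + 1) for j in range(i)]
    # Each node appears in `stubs` once per link end, so a uniform draw
    # from this list is a degree-proportional draw over nodes.
    stubs = [node for edge in edges for node in edge]
    for new in range(m + 1, n_nodes):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for target in targets:
            edges.append((new, target))
            stubs.extend((new, target))
    return edges


def degrees(edges):
    """Return a Counter mapping each node to its number of links."""
    return Counter(node for edge in edges for node in edge)


if __name__ == "__main__":
    deg = degrees(grow_network(1000, m=2, seed=42))
    print("five best connected nodes:", deg.most_common(5))
```

Running it and looking at the best connected nodes tends to show early arrivals collecting far more links than late ones, which is the rich-get-richer effect the quoted passages describe.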

preferential attachment. In the eyes of the social sciences, however, understanding which of all of these hypotheses drives the formation of the network is what one needs to explore. ❧

For example what drives attachment of political candidates?

August 26, 2018 at 08:15AM

Finally it is worth noting that trust, through the theory of social capital, has been connected with long-term economic growth—even though these results are based on regressions using extremely sparse datasets. ❧

And this is an example of how Trump is hurting the economy.

August 26, 2018 at 08:33AM

Nevertheless, the evidence suggests that social capital and social institutions are significant predictors of economic growth, after controlling for the effects of human capital and initial levels of income (Knack and Keefer 1997), (Knack 2002).4 So trust is a relevant dimension of social interactions that has been connected to individual dyads, network formation, labor markets, and even economic growth. ❧

August 26, 2018 at 08:35AM

Social scientist, on the other hand, have focused on what ties are more likely to bring in new information, which are primarily weak ties (Granovetter 1973), and on why weak ties bring new information (because they bridge structural holes (Burt 2001), (Burt 2005)). ❧

August 26, 2018 at 09:45AM

heterogeneous networks have been found to be effective promoters of the evolution of cooperation, since there are advantages to being a cooperator when you are a hub, and hubs tend to stabilize networks in equilibriums where levels of cooperation are high (Ohtsuki et al. 2006), (Pacheco et al. 2006), (Lieberman et al. 2005), (Santos and Pacheco 2005). ❧

August 26, 2018 at 09:49AM

These results, however, have also been challenged by human experiments finding no such effect (Gracia-Lázaro et al. 2012). The study of cooperation in networks has also been performed in dynamic settings, where individuals are allowed to cut ties (Wang et al. 2012), promoting cooperation, and are faced with different levels of knowledge about the reputation of peers in their network (Gallo and Yan 2015). Moreover, cooperating behavior has seen to spread when people change the networks where they participate in (Fowler and Christakis 2010). ❧

Open questions

August 26, 2018 at 09:50AM

https://boffosocko.com/2018/05/01/episode-06-my-little-hundred-million-revisionist-history/

I got a ton of requests for this.... A time lapse for every hit of Ichiro's @mlb career. pic.twitter.com/w8uhzlSnp0

— Daren Willman (@darenw) May 6, 2018

I once taught an 8 am college class. So many grandparents died that semester. I then moved my class to 3 pm. No more deaths. And that, my friends, is how I save lives.

— Viorica Marian (@VioricaMarian1) May 5, 2018

In 1984, Elvis Costello released what he would say later was his worst record: Goodbye Cruel World. Among the most discordant songs on the album was the forgettable “The Deportees Club.” But then, years later, Costello went back and re-recorded it as “Deportee,” and today it stands as one of his most sublime achievements.

“Hallelujah” is about the role that time and iteration play in the production of genius, and how some of the most memorable works of art had modest and undistinguished births.

The bigger idea here of immediate genius versus “slow cooked” genius is the fun one to contemplate. I’ve previously heard stories that Mozart’s composing involved working things out in his head and then later putting them on paper much the same way that a “cow pees” (i.e. all in one quick go or a fast flood).

Another interesting thing I find here is the insanely small probability that the chain of events that makes the song popular actually happens. It seems worthwhile to look at the statistical mechanics of the production of genius. Perhaps applying Ridley’s concept of “ideas having sex” and Dawkins’ meme theory (from The Selfish Gene) could be useful. What does the state space of genius look like?